Group Relative Policy Optimization Done Right (Dr.GRPO)#

Last updated: Sep 11, 2025

Doc Author: Ziyi ZENG

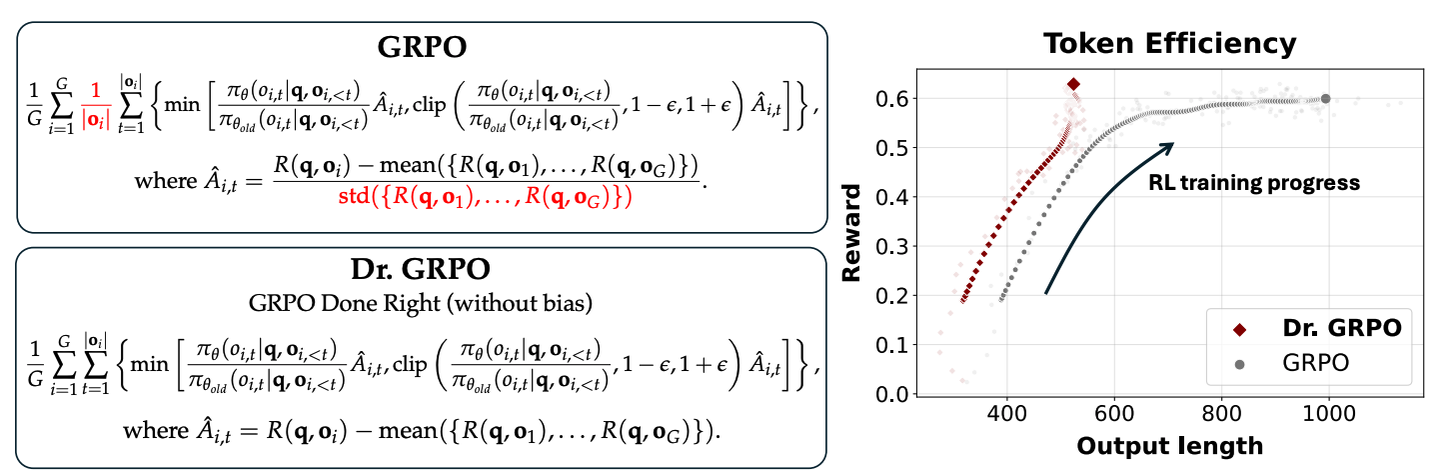

Dr. GRPO is an advanced optimization method introduced to address the limitations of previous reinforcement learning approaches in enhancing the reasoning capabilities of large language models (LLMs). It specifically tackles the issue of optimization bias in Group Relative Policy Optimization (GRPO) that artificially inflates response lengths, especially for incorrect outputs. By improving token efficiency while preserving reasoning performance, Dr. GRPO enables minimalist training recipes to achieve state-of-the-art results, such as 43.3% accuracy on AIME 2024 with a 7B base model.

For more details:

AReal Detail: Paper of AReal

Dr.GRPO Detail: Paper of Dr.GRPO

Algorithm Core Parameters#

We only list the different parameters from GRPO here:

actor.adv_norm.mean_level: The level when calculate the mean of advantage. options:group,batchornone. In dr.GRPO, it is set togroupby default.actor.adv_norm.std_level: The level when calculate the std of advantage. options:group,batchornone. In dr.GRPO, it is set tononeby default.

Example Usage#

The algorithm is experimental and may not be stable.

We recommend to change the parameter within the configuration file (i.e. gsm8k_drgrpo.yaml).

Backend |

CMD |

|---|---|

local |

|

ray |

|

slurm |

|

Baselines#

We still lack baseline, welcome to contribute!